In November 2022, Malaysia held its 15th general election, ending in a polarised electorate as well as a digital space strewn with hate speech, disinformation, and misinformation. Recognising the need for the public to be educated on the dangers of hate speech, especially during the elections, the Centre for Independent Journalism (CIJ) Malaysia, in collaboration with Universiti Sains Malaysia, Universiti Malaysia Sabah and University of Nottingham Malaysia, launched Say No to Hate Speech, a hate speech monitoring tool. The project is now gearing up to monitor the next six state elections in Malaysia due to take place this year.

CIJ is a non-profit organisation that aspires to a society that is democratic, just and free where all people will enjoy free media and the freedom to express, seek and impart information. In Part II of our interview, we spoke to Wathshlah Naidu, CIJ’s executive director, and Lee Shook Fong, programme officer, to dive deeper into the nuances and challenges of hate speech, biases both algorithmic and human, as well the as the possibilities for civil society and citizens to combat hate speech.

This interview has been edited for length and clarity. You can read part one of this interview here.

Hate speech can be hard to define and is often contextual. Can you walk us through how you define hate speech for your tool?

WN: We partner with three universities in Malaysia – Universiti Sains Malaysia, Universiti Malaysia Sabah and the University of Nottingham Malaysia – to develop a framework for hate speech around these questions: how are we going to define hate speech? Do we take the legalistic approach as there is no universally accepted definition of hate speech? How are we going to track and measure them? We also conducted research and engaged with organisations such as the Centre for Information Technology and Development (CITAD) (Nigeria), Masyarakat Anti Fitnah Indonesia (MAFINDO), and Memo 98 (Slovakia) who have done similar projects. At the same time, we also looked at Article 19 and the Rabat Plan of Action to see how hate speech is defined.

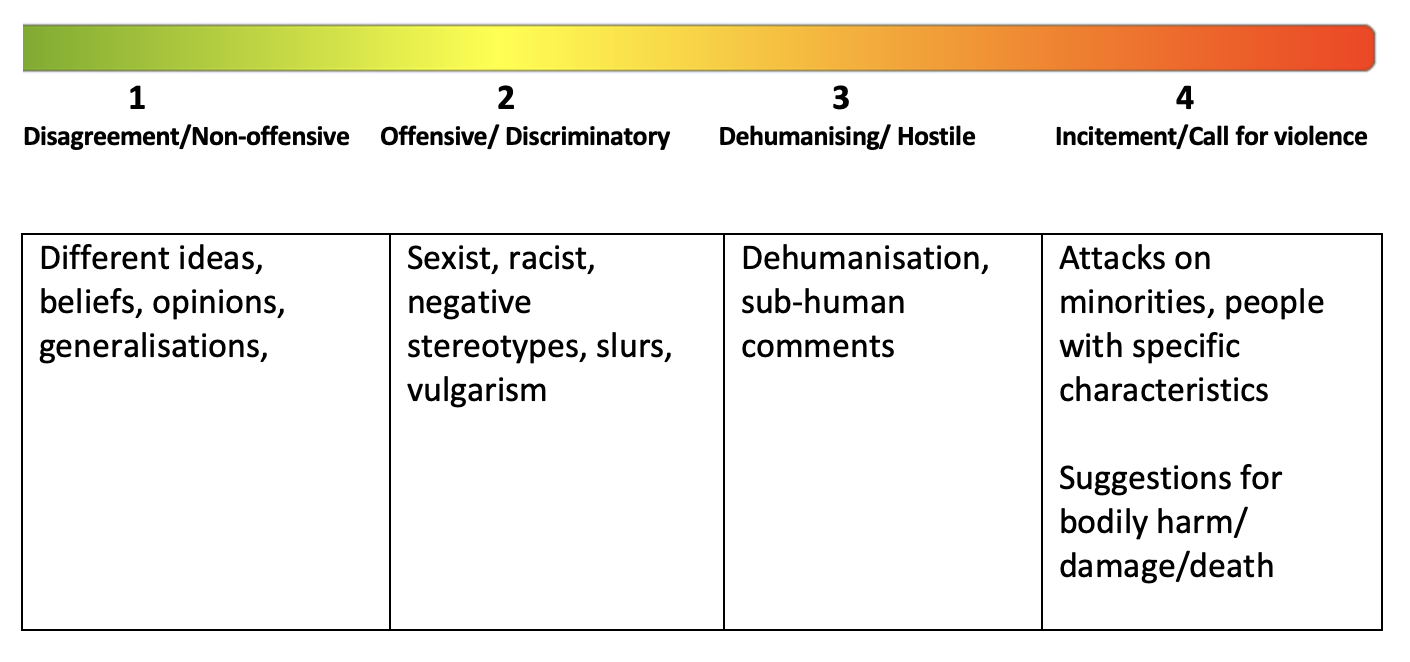

We then realised there is no one set of framework to adapt for our tool. We then developed our own severity scale and contextualised it within the social and political landscapes of Malaysia. The severity scale is as follows:

After developing the framework and severity scale, we then developed a set of keywords. It was also a challenge by itself as it is not as simple as just assigning one keyword as hate speech, or another as not. It also depends on many contexts such as the actor, their intent, and the level of engagement, for example. Recognising this, we developed all of these metrics to help us categorise the severity level. We then initiated a pilot to test the tool.

The tool also made use of algorithms to scrape data on identified issues and themes. Can you tell us more about how you developed the algorithm and the challenges around it, especially concerning algorithmic bias?

LSF: As part of the core work at CIJ is media monitoring, we made a crucial decision to develop the tool in a way it would belong to CIJ, and not with any other companies. That means whoever the developers we work with for this tool, they would understand that it is able to be transferred to other developers we might work with in the future.

We actively decided to go with a targeted approach – by targeting the actors as mentioned by Wathshlah – instead of broad scraping. We also furnished the developers with keywords in these three languages: Malay, English and Mandarin. They then used the keywords to scrape data and create templates based on the accounts identified. There was a lot of tweaking and fixing so we could have results across all actors, platforms and severity levels. At this point, we already have about 300 rules for automated tagging, but as with any tools that make use of algorithms, it is constantly learning and we are also constantly assessing. We also developed a set of rules to look at the behaviours of coordinated inauthentic behaviour (CIB), trolls and bots. We hope to be able to see more improvements as we work with the tool for the next six state elections in 2023.

WN: The issue of algorithms is a constant challenge because the machine is currently at [a nascent] stage. It will only get better as more information and data are fed. It can help us gain data, and that’s about it for now. We actively decided at this point that we do not want machine learning and algorithms to determine the severity scale, as they are still not equipped to do so. This is also why we do not fully rely on algorithms, and employ human monitors to have a check and balance in the process.

As such, our analysis is very crucial in putting out the full information. Numbers alone will not do, we also need to contextualise the stories found within the numbers.

LSF: One of our experts has this vision that if you keep feeding data to the tool, we will be able to rely less on human monitors. Having said that, I do not foresee this happening in the near future because even now with 300 rules, the algorithm still makes mistakes and is still learning. We are grateful that the developers are upfront about the processes and are continually assessing the tool.

You also employ some human monitors. What are their roles and how do you mitigate biases when it comes to them as well?

WN: We trained our human monitors to review, categorise and tag data according to the set of keywords. They also identify the severity scale of the hate speech and verify the authenticity of accounts on bot-checking sites.

The last part can get challenging because bots are becoming more sophisticated. They can now use real names, real profiles and photos. But upon closer inspection, you can gauge they are bots based on the date the account was set up, their followers, their following, their patterns of sharing and posting, the message and narrative, and many others. As such, we adopted the 3M process: the messenger, the message and the messaging, to look within these contexts.

We understand that all of us have some form of implicit biases – and this includes our human monitors – and it is almost impossible to be objective. What we do is we often have conversations and brainstorming sessions with our human monitors to reflect and attempt to lessen these biases as much as possible. We also work with the universities [mentioned earlier] to create some formula to determine the quality of the tagging work. As a final form of verification, we also carried out two sets of intercoder reliability tests to ensure consistency in our tagging: one to determine the severity levels, and another to determine the identification of CIBs.

Do you foresee other challenges in the future – aside from language, as mentioned – and especially with monitoring hate speech during the upcoming six state elections this year?

WN: Developing this tool requires a tremendous amount of resources – both human and financial – and I would say resources would be one of the anticipated challenges in the near future. This is especially since one of our plans this year is to also integrate this tool as an added value to our organisation, and not just a project we run during the elections.

We also understand the psychological and emotional toll inflicted on our human monitors who have to look at hate content on a daily basis. As such, we constantly get in touch with them and offer support like counselling and encouraging them to journal daily. This is also something we need to be prepared with for the next year and in the longer term.

What do you plan to do with the data once this project concludes or pivots to other goals? What are your long-term plans for the tool?

WN: The first contribution involving the data would be academic. We are already thinking of many possibilities for research papers and projects from many angles, and I think this is critical in the development of our knowledge and analysis of the issue.

The second would be for advocacy. Evidence-based advocacy is all about combining data and real-life experiences to further issues and push for change. We can share this data with the platforms to show that there are dangerous narratives that they can help suppress or mitigate. We can also share this data with the government to show the patterns, as well as use this information to create initiatives to push back against these hate speeches.

We will be looking into this in more detail this year, as well as setting up a multi-stakeholder engagement where data engagement would be critical.

LSF: This reminds me of the Netflix documentary, The Social Dilemma. One of the fostering programmes mentioned in the documentary used a model where it managed to convert people who have so much hate, towards seeing and accepting other people’s perspectives. Just thinking out aloud about the possibilities.

Can this tool be adapted by other organisations or outside Malaysia, or will it have to be built from scratch if someone wanted to replicate it?

WN: The tool is still being tested and may not be ready to be adapted by other organisations. We are however happy to share the framework, severity threshold, and rules in case any organisation is interested in developing it further.

What can you say about the future of the ‘Say No to Hate Speech’ project? And how can this project extend the possibilities of civil society and citizens in combating hate speech?

WN: There are so many possibilities for the tool in the future, as well as for the website, the platforms and the partnerships. However, we are scraping the bottom of the barrel. There is so much more to do and we cannot be just concentrated within a few organisations. We need more collaboration. Once we are done with data gathering, we will work on how to make this granular data accessible to all CIJ partners so they can use it for their own advocacy. Beyond that, we need to make use of the attention this project has gained by creating more conversations around hate speech and why it should not be tolerated.

Beyond that, we need to think about the possibilities within the context of working with this new unity government. After the political coup in 2018, we are cautious amidst hope. We need to think about how to work strategically with this new unity government, and push them for as many reforms as possible, especially concerning human rights and freedom of expression.

Read part one of this interview here.

Also see CIJ's Resource Guide: Say No to Hate Speech.