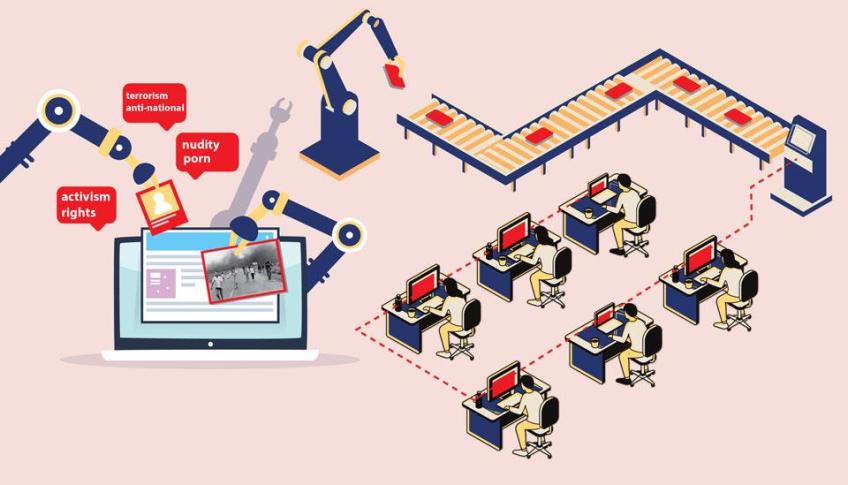

Most social media companies currently moderate online content through a mix of automation (sometimes referred to as artificial intelligence or AI) and human moderators. Automation deals effectively with certain kinds of content, such as sexually explicit images, child pornography and terrorism-related content, but it is not a foolproof system. Human intervention and oversight are still needed, especially when it comes to the meaning of what is being said in comments or conversations online.

What is becoming increasingly evident is that the choice is not between the alleged neutrality of the impersonal machine and the errors and limitations of human moderation: the two work in tandem.

Perspectives on the use of AI and humans in content moderation

“Cheap female labour is the engine that powers the internet” - Lisa Nakamura

“… social media platforms are already highly regulated, albeit rarely in such a way that can be satisfactory to all.” - Sarah Roberts

What makes any content platform viable is directly linked to the question of what makes you viable as content. Sarah Roberts, through a series of articles, shows how the moderation practices of social media giants all operate in accordance with a complex web of nebulous rules and procedural opacity. Roberts describes content moderation as dealing with “digital detritus” and compares cleaning up the social media feed with how high-income countries dump garbage on low- and middle-income countries (for instance, Canada's dumping of shipping containers full of garbage on the Philippines). She adds that there has been “significant equivocation regarding which practices may be automated through artificial intelligence, filters and other kinds of computational mechanisms versus what portion of the tasks are the responsibility of human professionals.” Roberts also notes that in May 2017 Facebook added 3,000 moderators to its existing global workforce of 4,500.

Continue reading at GenderIT.org.